[et_pb_section fb_built=”1″ admin_label=”section” _builder_version=”3.0.47″][et_pb_row admin_label=”row” _builder_version=”3.0.48″ background_size=”initial” background_position=”top_left” background_repeat=”repeat”][et_pb_column type=”4_4″ _builder_version=”3.0.47″][et_pb_text admin_label=”Text” _builder_version=”3.21.4″ background_size=”initial” background_position=”top_left” background_repeat=”repeat” hover_enabled=”0″ header_font=”||||||||” header_2_font=”||||||||” header_2_font_size_last_edited=”on|phone” header_2_font_size=”28px” header_2_line_height=”1.1em”]

Get Google To Index URLs & Discover Penalties In The New Search Console

Google recently sunset the old version of its Search Console tools and migrated all webmasters over to the “new version” of Search Console. One of the features missing from the new version of Google’s webmaster tools is the “Fetch As Google” function underneath the Crawl tab. This was how webmasters could request that Google crawl and index new URLs on their site. However, site owners can still do this in Search Console. It just works differently now.

Below, I’ll walk through how any webmaster can set up Search Console and request that Google crawl and index URLs on their website. I’ll also show you how to find penalties that Google might have levied against your website.

This can potentially help new content appear in Google Search faster, but more importantly, it can help websites ensure all their URLs are indexed in Google Search.

Watch a video of me briefly walking through this process

Setting up Search Console to crawl and index website URLs

Hopefully, you’ve already set up Google Search Console on your website and visit it regularly. It’s a great hub to check for helpful information about the way Google sees your website.

We wrote a good article on some of the helpful modules inside of Search Console here. The article talks about how to use this data to improve search results.

If you don’t have Search Console set up yet, you can click this link and follow the easy-to-use wizard.

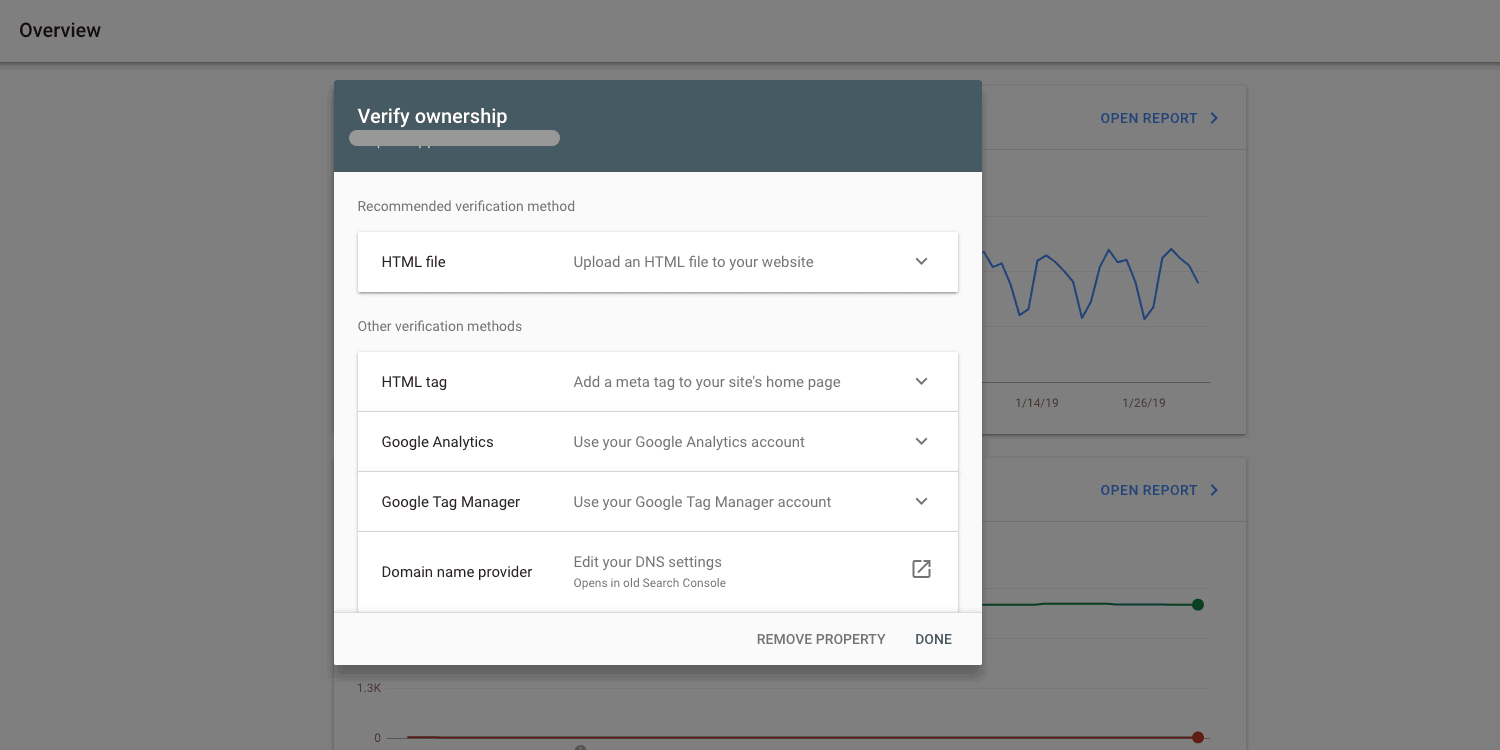

It is very easy to verify your site with Search Console. The easiest way is by connecting it to your Google Analytics account. If your Analytics account isn’t connected to the same Gmail account, you can use the HTML tag option instead and add a single meta tag to the head of your website. Once the tag is added, the site can be verified immediately.
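
If you go the HTML tag route, it can be worth confirming that the tag really made it into the page before you click verify. Here is a minimal sketch of that check in Python. The page source and the token value are placeholders I made up for illustration; your real token comes from the Search Console verification screen.

```python
from html.parser import HTMLParser


class VerificationTagFinder(HTMLParser):
    """Collects the content of any google-site-verification meta tag."""

    def __init__(self):
        super().__init__()
        self.tokens = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        # Attribute values can be None, so fall back to an empty string.
        if (attr_map.get("name") or "").lower() == "google-site-verification":
            self.tokens.append(attr_map.get("content") or "")


def find_verification_tokens(html):
    """Return every verification token found in the given HTML string."""
    finder = VerificationTagFinder()
    finder.feed(html)
    return finder.tokens


# Hypothetical page source; the token below is a placeholder, not a real one.
sample_page = """
<html>
  <head>
    <title>Example</title>
    <meta name="google-site-verification" content="PLACEHOLDER_TOKEN" />
  </head>
  <body></body>
</html>
"""

print(find_verification_tokens(sample_page))
```

An empty list means the tag is missing from the HTML you checked, which is usually a theme or caching issue rather than a Search Console problem.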

Doing “Fetch as Google” in New Search Console

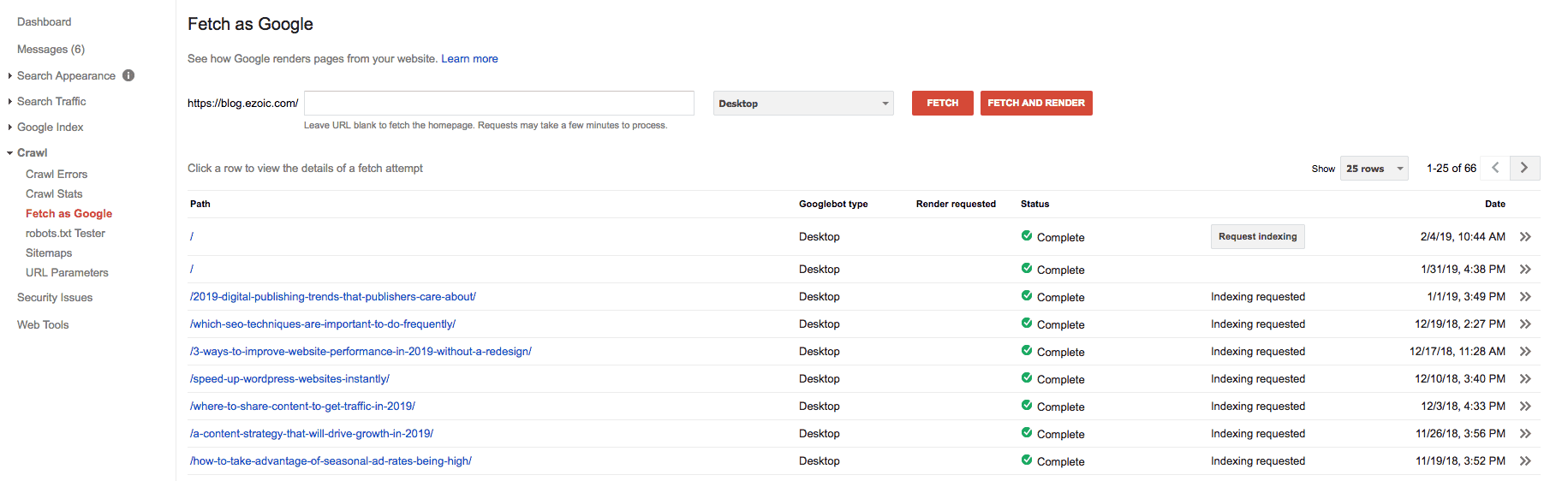

One of the most important and commonly used features inside of the old Google Search Console was the ability to have Google test, render, crawl, and index any URL that you submitted through the tool.

Why this was great: You could have Google simply crawl a single URL or crawl a URL and all linked pages (often the majority of your site). If the URL wasn’t blocked (the tool would tell you if it was), Google would allow you to index the URL(s) quickly and they would typically appear in Search in approximately 24 hours. This allowed publishers to get new content into Search quickly while confirming that their URLs could be indexed in the first place.

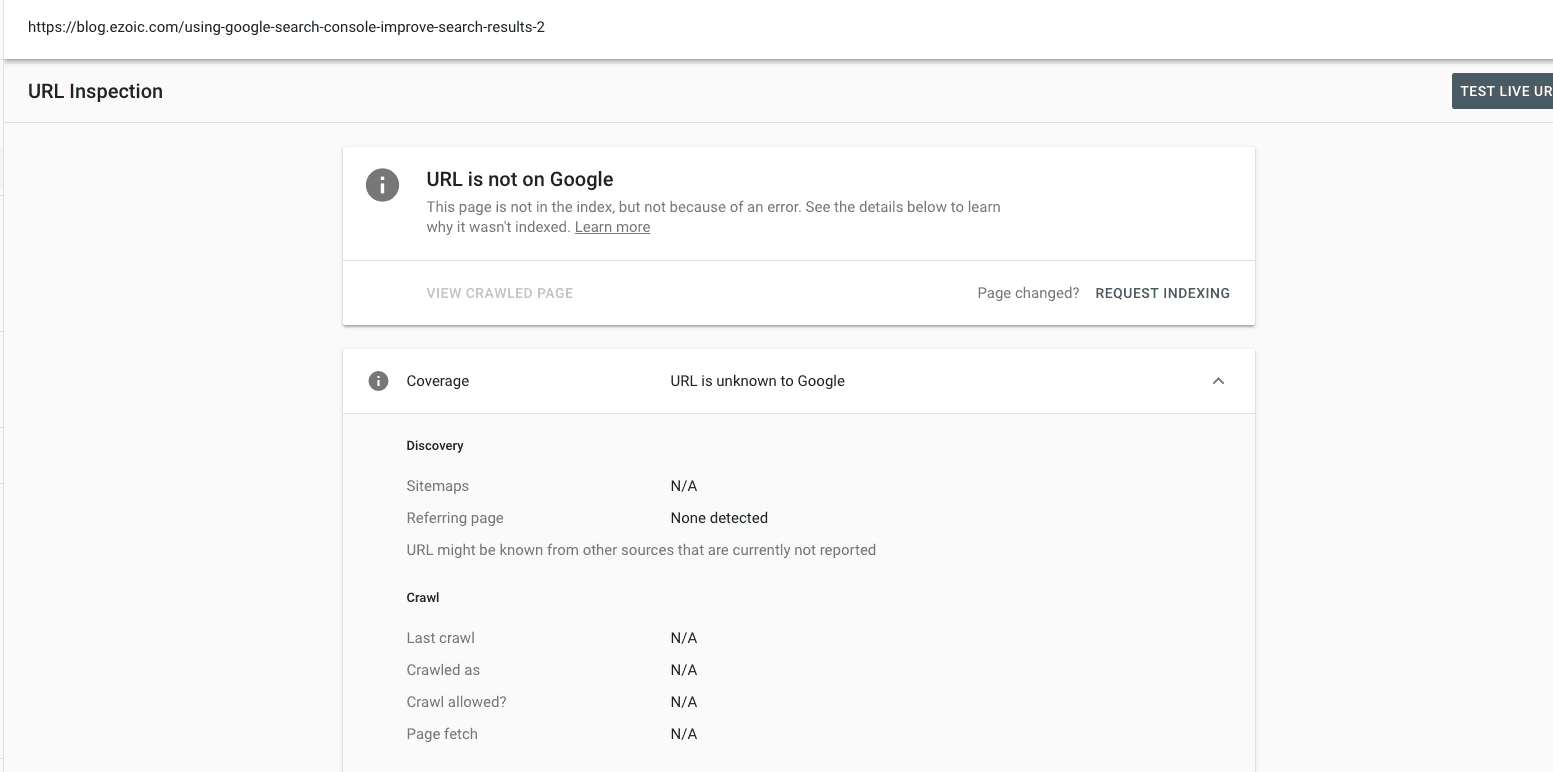

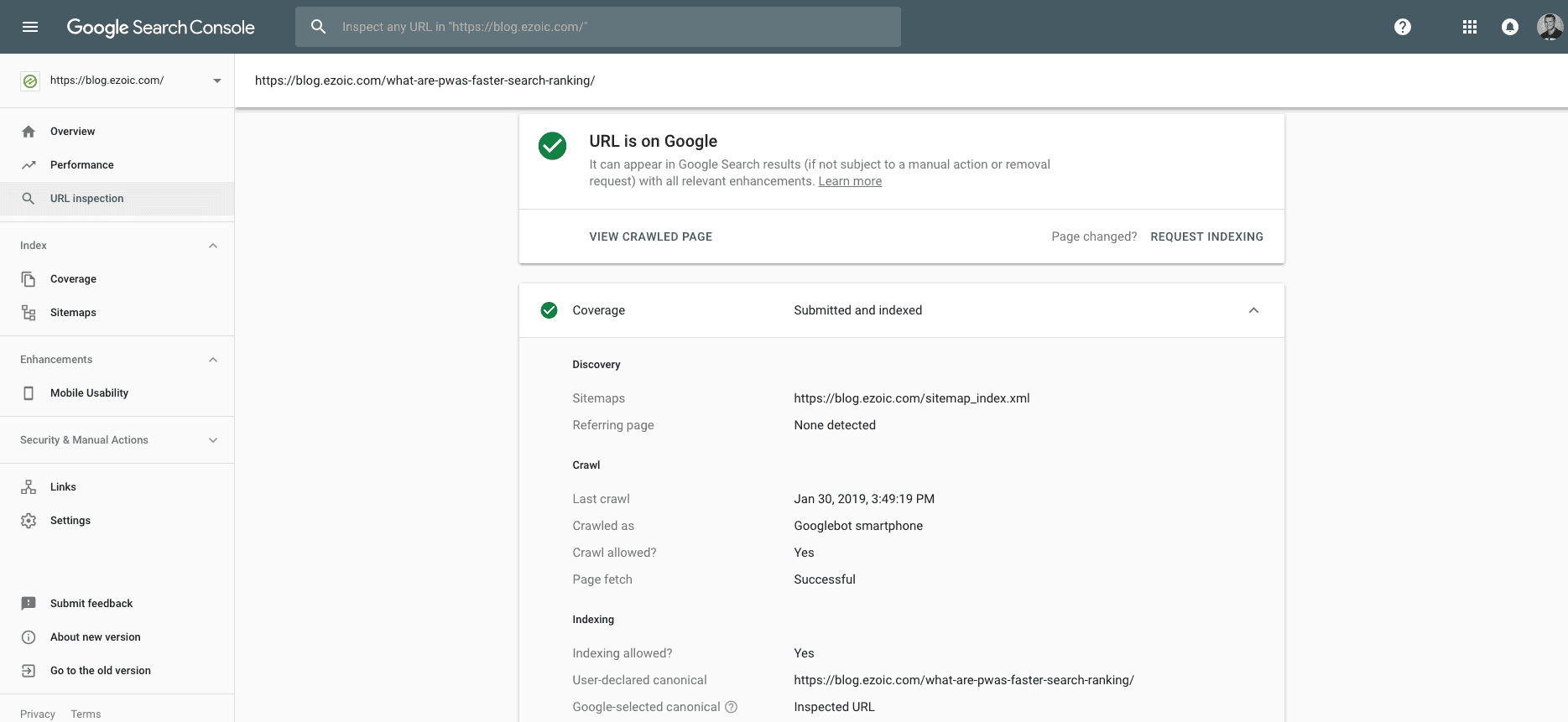

How you can still do this: You can see me walking through it in the video at the top; however, it’s actually really simple. At the top of the page inside of the new Google Search Console, there is a bar that says “Inspect any URL in https://yourwebsite.com”. Simply put any URL from your website into that tool and press “enter” (see GIF below).

If the URL is brand new, Google will crawl it and let you know if it can be indexed or not.

Since it is new, it will show up as not in Google’s index and you can then click on “Request Indexing”.

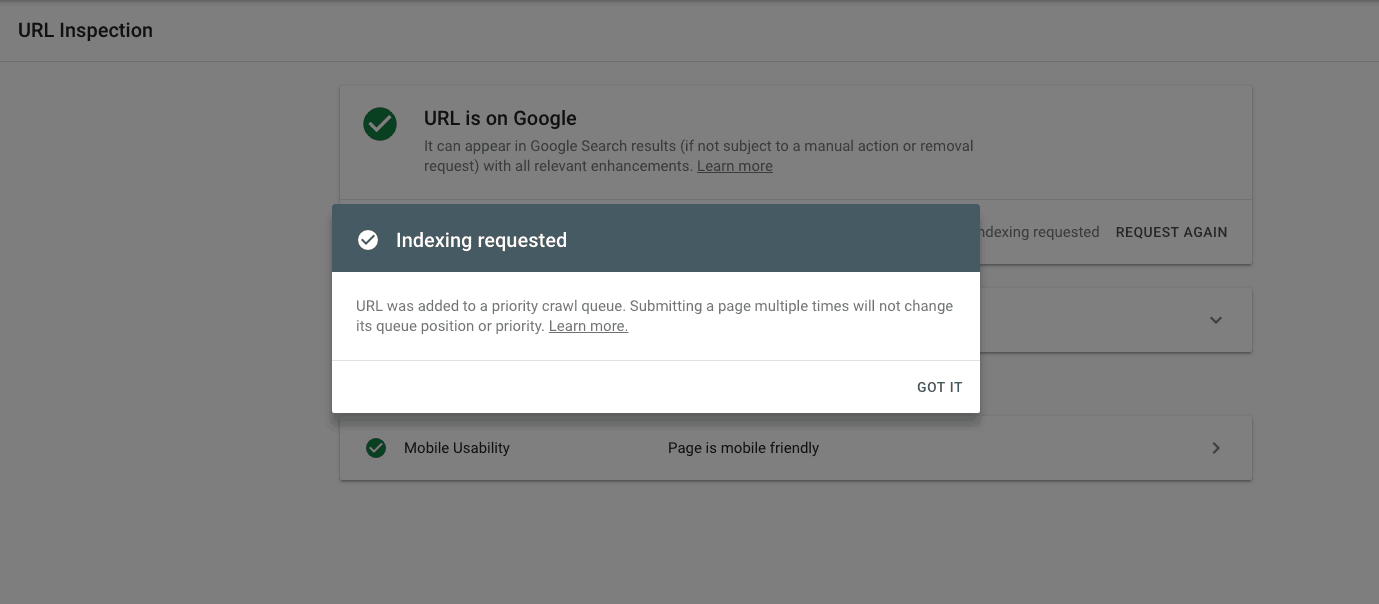

Google will then run a test to ensure that the URL can be indexed properly. Afterward, it will say “URL submitted to index”.

How long until Search Console tells Google to index the URL? While even Google won’t commit to a specific timeframe, in most cases URLs that are submitted to Search Console begin appearing in Google Search within 24 hours. Sometimes it can take slightly longer, but most of the time it takes less than 24 hours.

How long until Google crawls the URL if it isn’t submitted in Search Console? The fastest way to have new content rank, or even appear at all, inside of Google Search is to ask Google to index it via Search Console. Without your request, it can take days or even months before Google indexes a new URL. It depends on a lot of factors that Google does not readily share. That being said, many websites will notice that Google has indexed their new URLs within hours of publishing even though they haven’t submitted the URL in Search Console.

Will having Google crawl and index my page through Search Console help with rankings? No. Submitting a URL to Search Console will not, by itself, help the content rank any higher for specific keywords. However, this practice can help you ensure that the URL is not blocked and is able to be indexed by Google. It can also help it appear faster in Google Search than by waiting for Google to crawl it naturally.
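
One common reason a URL shows up as blocked is a robots.txt rule. As a rough sketch, you can test a URL against your robots.txt rules locally with Python’s standard library. The rules and URLs below are made up for illustration, and Python’s parser does not match Google’s handling in every case (wildcards, for example), so treat the result as a first pass and let Search Console have the final word.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt contents for an example site.
sample_robots = """
User-agent: *
Disallow: /private/
"""

for url in (
    "https://example.com/blog/new-post",
    "https://example.com/private/draft",
):
    print(url, is_crawlable(sample_robots, url))
```

Keep in mind this only covers robots.txt. A page can also be kept out of the index in other ways, such as a noindex tag, which this sketch does not look at.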

Should I have Google crawl pages or articles when I update them?

Yes!

You should definitely have Google re-crawl or re-index a page or post after you update it.

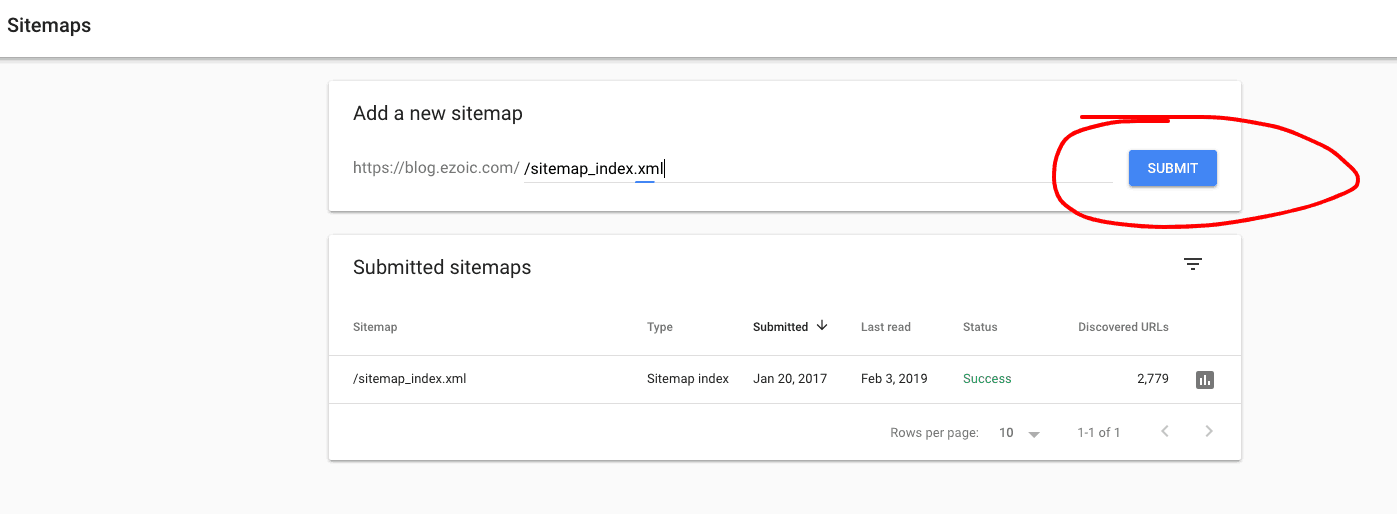

It is really hard to say how often Google may be crawling different parts of your site. Adding your sitemap to Search Console can help Google do this better automatically. This is something that Google recommends as a best practice for SEO.
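
Most CMS platforms and SEO plugins generate a sitemap for you, but if yours doesn’t, the file format is simple. Below is a minimal sketch that builds one with Python’s standard library. The URLs and dates are placeholders, and the function name is my own for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last_modified) pairs, dates as YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages on an example site.
pages = [
    ("https://example.com/", "2019-04-01"),
    ("https://example.com/blog/new-post", "2019-04-10"),
]

print(build_sitemap(pages))
```

Save the output as sitemap.xml at the root of your site, then submit that URL in the Sitemaps section of Search Console.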

If you look at your server log files, you can see how often Google crawls each page on your own; however, almost no one does this anymore. Unless you’re a total geek, it’s not really all that necessary either.
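
For the total geeks, here is a rough sketch of what that log check might look like. It assumes your server writes the common Apache/Nginx “combined” log format, and the log lines are sample data I made up. It also trusts the user-agent string, which can be spoofed, so the counts are an estimate rather than proof of a real Google crawl.

```python
import re
from collections import Counter

# Assumes the "combined" access log format used by default on many
# Apache and Nginx setups; other formats need a different pattern.
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests per path where the user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


# Made-up sample lines for illustration only.
sample_log = [
    '66.249.66.1 - - [10/Apr/2019:06:25:14 +0000] "GET /blog/new-post HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Apr/2019:06:26:02 +0000] "GET /blog/new-post HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '66.249.66.1 - - [10/Apr/2019:07:01:40 +0000] "GET /about HTTP/1.1" 200 2210 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

for path, count in googlebot_hits(sample_log).items():
    print(path, count)
```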

Beyond giving Google the best possible understanding of your site architecture and hierarchy through your sitemap, anytime you make updates to part of your site you should have Google re-crawl the page and index it again.

Entering an updated page’s or article’s URL in the new Search Console Inspect URL tool lets you see all of Google’s indexing stats for the page. Then, you can request that Google index the page again, just as you would with a new URL.

This will ensure that Google Search is indexing your pages or articles based on the most recent updates. This is important if you have recently added or improved the content on a page in an attempt to make it rank higher.

Is Google penalizing my site for any reason?

A common phrase I often hear from webmasters and publishers is … “Google has penalized our site because we have noticed a loss in rankings.”

First, it is always important to double-check an organic traffic drop for all the important signals and factors to better understand what you’re dealing with.

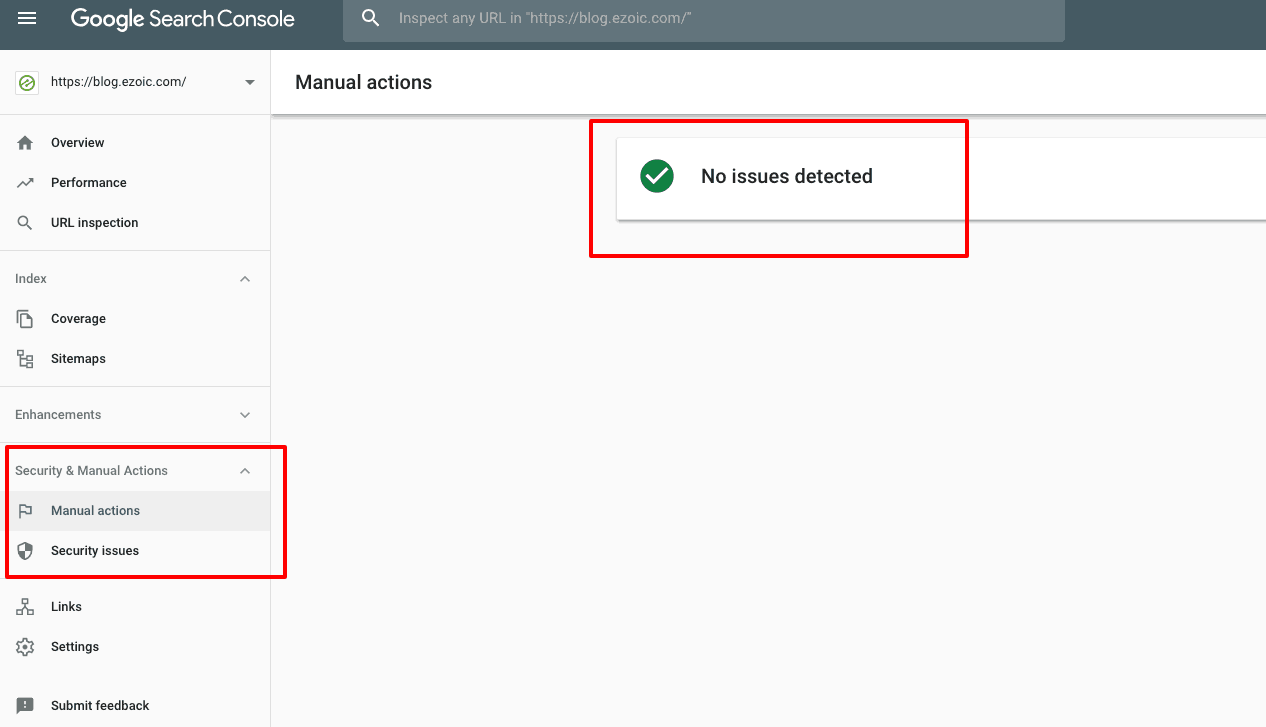

Second, if Google has actually penalized your site (for being ad-heavy, for web spam, because the site was hacked, etc.), they will report most of this inside of Search Console or Webmaster Tools.

One of the most common things I’ll hear from publishers is that they believe that too many ads are affecting their SEO. While ads can affect the visitor’s experience, and this DOES impact SEO if it is not managed properly, Google is actually really clear about when they would penalize a site or rank it lower for being ad-heavy.

You can actually check this report on your site inside of Search Console by clicking this link.

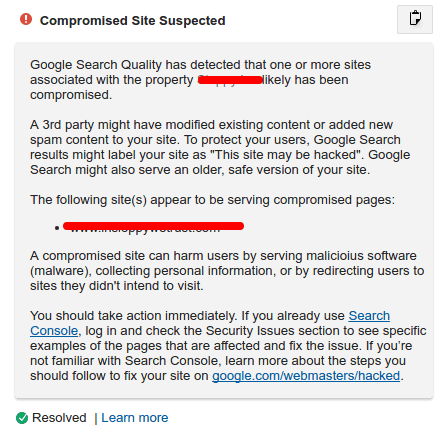

Furthermore, manual penalties are reported directly to webmasters inside of Search Console. These could be the result of a manual reviewer finding something on your site that clearly violates webmaster guidelines. Google is usually pretty clear about what it is they’d like to have you fix.

Lastly, if your website has been hacked or compromised, Search Console is often the first to warn you. If it is set up properly, you will get emails about potentially hazardous behavior occurring on your site.

Often, hackers will proxy the website so that the website owner, and even the vast majority of the visitors, won’t see the hacked version of the site. This allows them to infiltrate the site and serve malware to a small subset of visitors unnoticed. Google Search Console will often show this to you inside the interface before anyone else is able to notice.

But, I lost ranking and don’t see any penalties. What happened?

Rankings and organic traffic are definitely correlated, but they aren’t completely inter-connected. You can lose organic traffic without losing any rankings.

It might seem hard to believe, but these things aren’t as simple as you might imagine. If your traffic drops, a lot of different things could be causing the drop. What’s more, losing traffic could be just as much about the way Google Search is changing as it is about another site’s content ranking above yours.

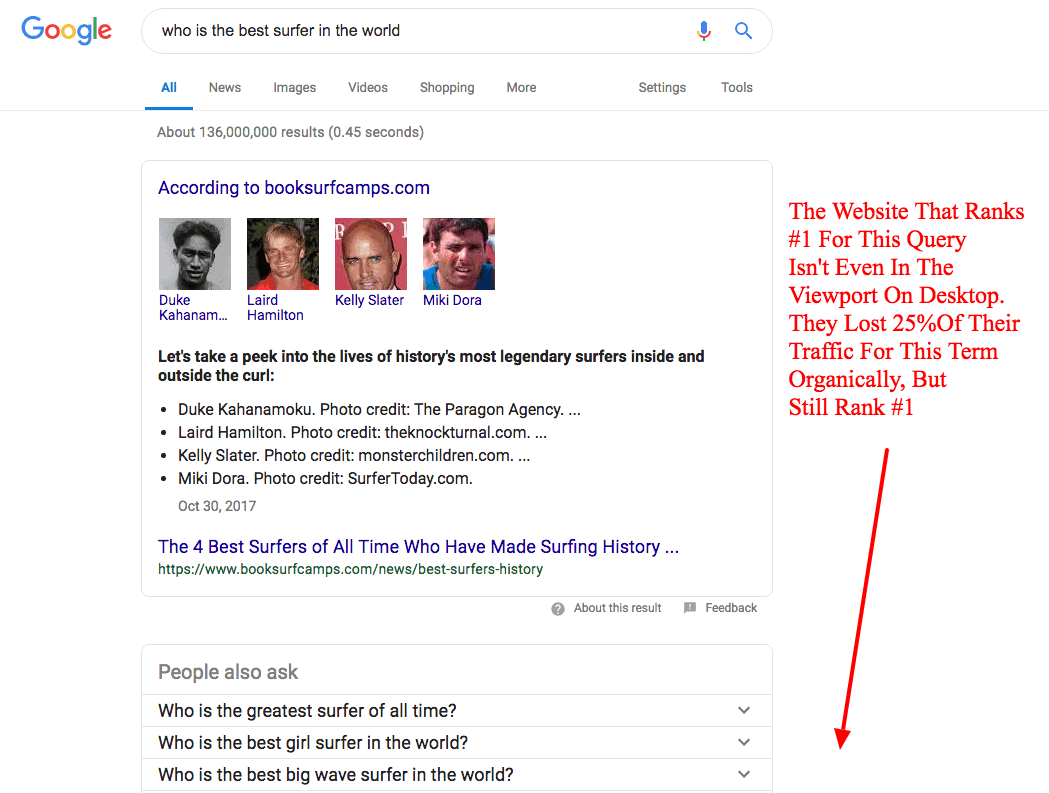

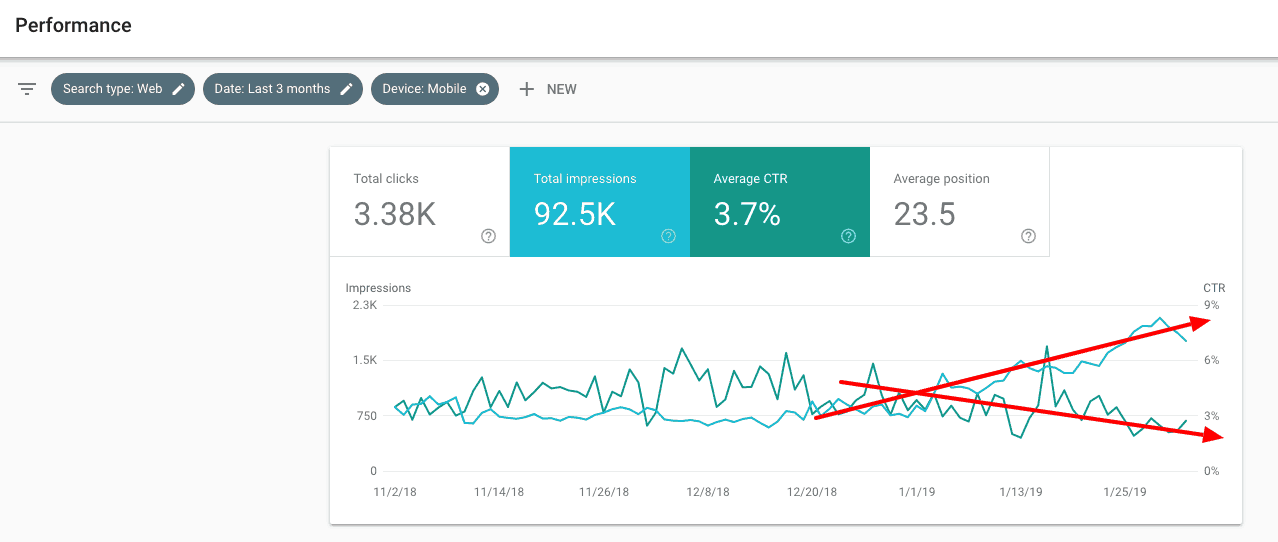

We are seeing more and more sites that have ranked well for evergreen keywords (i.e. “King Henry The IV”) see a drop in organic traffic without any loss in keyword rankings. With Google’s Knowledge Graph and other rich snippets being featured more prominently in more results, typical organic results are seeing varied performance.

That’s why looking at things like impressions, average ranking position, and click-through-rate inside of Search Console can be helpful.
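
To make that concrete, here is a small sketch of the math. Click-through rate is simply clicks divided by impressions. The numbers below are hypothetical, not data from a real site. They show a page that holds the same average position in both periods yet loses clicks, which is the pattern described above.

```python
def click_through_rate(clicks, impressions):
    """Return clicks divided by impressions, or 0.0 when there are no impressions."""
    if impressions == 0:
        return 0.0
    return clicks / impressions


# Hypothetical Search Console numbers for one page over two periods.
before = {"clicks": 400, "impressions": 10000, "position": 2.1}
after = {"clicks": 250, "impressions": 10000, "position": 2.1}

for label, period in (("before", before), ("after", after)):
    ctr = click_through_rate(period["clicks"], period["impressions"])
    print(f"{label}: position {period['position']}, CTR {ctr:.1%}")
```

If position and impressions hold steady while CTR falls, the likely culprit is a change in how the results page looks rather than a loss in rankings.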

Ultimately, if you have in fact lost true keyword rankings and clicks from Google search (organic traffic), and you are concerned about why Google is ranking your content lower than it used to, it’s worth looking at three things.

What to do to improve rankings if you don’t have a penalty:

- Add schema markup so that you can appear in more rich results inside Google Search

- Improve “search relevance” for your declining content

- Explore existing and new keywords that are good bets for delivering more organic traffic
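
For the schema markup item in the list above, one widely used approach is a JSON-LD script tag in the page. Here is a minimal sketch that generates one for an article. The function name and the sample values are my own placeholders, and the properties shown are only a small subset of what schema.org defines for the Article type.

```python
import json


def article_json_ld(headline, author_name, date_published, url):
    """Return a JSON-LD script tag describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value can never close the script tag early;
    # "<\/" is still valid JSON.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


# Placeholder values for illustration.
print(
    article_json_ld(
        headline="Example Article Title",
        author_name="Jane Doe",
        date_published="2019-04-10",
        url="https://example.com/blog/example-article",
    )
)
```

Paste the output into the head of the page, then inspect the URL in Search Console to see whether Google picks up the markup.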

What should I do next inside the New Search Console?

For now, it’s good to check it regularly. Google has added a lot of helpful performance stats and coverage reports to help you understand how Google is viewing your site, how it is performing in Google Search, and if there are any errors or penalties that are worth fixing on your site.

By monitoring Search Console, regularly updating your sitemap, submitting new URLs, and having Google re-index updated pages or articles, you can ensure that your website is primed for success in Google Search.

While the world of SEO can be daunting and complex, Search Console is a source of truth directly from Google regarding your website. It is a tool that should be the first place you come to examine issues with your organic traffic.

Thoughts, questions, or comments? Leave them below.

[/et_pb_text][/et_pb_column][/et_pb_row][/et_pb_section]

Great read thx , lots of people just dont get the incredible data that Google provides through GSC. IMHO way better than analytics

Such a great info! Thank you for sharing this post

Hi! Really interesting post! I actually launched a site 4 days ago, and Google hasn’t indexed yet. I tried requesting indexing, but I get a message of “Requesting Indexing Issues” I just submitted a sitemap…since I read that can help. Automatically the sitemap submitted got Succesful status…But still I’m getting the same indexing error. I checked on Security issues section on the left…ANd there’s none..All correct….

I do know that Google can take some days to Index a site…But it’s the fact that won’t even let me submit the indexing request that worries me…Any idea of what the problem could be? Thankas a lot!!! 🙂

Is Search Console set up? If so, when you crawl a URL and click, “submit to index”, what does it say?

Nice information. i was looking this information since google webmaster update itself.

thank for sharing

Thanks for sharing about the indexing new Url and how to check the penalties.. some time you blog can be penalized manually…

Thanks Tyler,

Nice and great information revealed.

Recently I had site coverage issues, which made me sort a solution.

I even got to know that it’s a general problem from Google as lot if webbers have related issues.

This information helped in a way.

Thanks for sharing.

Google’s new search console is an absolute gem for the digital marketing community. It is vital for each and every SEO professional.

Google has some great tools and the search console is no 1 on my list. Having your linked submitted almost instantly is remarkable.

I have registered my new domain and today I wanted to index it by google web master tools but I get error that it has been rejected, I am surprised, and checked manual actions but no issue there. Its a new site not yet designed also so could that be the reason google not want to index it?

It must be published to the web and be indexable (sitemap?)

how many times can you request this per day in the new console? does it still have a limit like old console?

There’s no expressed limit; however, crawl budget is something to look into if your site is requesting thousands+ crawls a day.

Can we check if our domain ever penalized before? I bought Expired domain and when I submit sitemap it’s showing couldn’t be fetched and many of URL indexed in google. Currently, there is no penalty showing in webmaster tool. Before daily crawl pages are above 1500, How Can I control crawls?

GSC is an excellent tool if you know how to use 100% of what it has to offer.

Thx for this article, really helped me realize a few things I had forgotten about.

Does the “URL inspection” crawl the page only or the page and all the links?

It crawls that URL, but keep in mind Google will see all links no matter what.

hi tyler, why googlewebmaster cant access not, can’t verify in webmaster. i cant crawl my url

any suggestion?

Go through the steps to verify domain access via Search Console

Google is really wilding these days

Thanks for taking the time to share this valuable information. I am now making it my daily habit to submit links to search console after publishing on my site. I think the information found under the search console is more accurate and helps to help rank websites.

What happens if you keep submitting your uRL for indexing. Is there a penalty of some sort?

Hi Seema,

There’s no penalty for asking google to Index / Re-crawl your site. Hope this helps!

Thanks for the update! do think Google is getting more complicated with it crawl and search engine platforms this day’s reason is that they have been some couple of update going on without 78% knowledge of online web masters ad publisher