[et_pb_section fb_built=”1″ admin_label=”section” _builder_version=”3.22.3″][et_pb_row admin_label=”row” _builder_version=”3.22.3″ background_size=”initial” background_position=”top_left” background_repeat=”repeat”][et_pb_column type=”4_4″ _builder_version=”3.0.47″][et_pb_text admin_label=”Text” _builder_version=”3.22.7″ background_size=”initial” background_position=”top_left” background_repeat=”repeat” text_font=”||||||||” header_font=”||||||||” header_2_font=”Oswald Light||||||||”]

Website Visitor Segmentation Offers Big Ad Earnings Reward For Little Work

In the world of online publishing, there is always a search taking place for small efforts that can deliver a lot of value.

The industry is awash with stories of publishers who changed something simple like a menu location or layout and dramatically improved their earnings or visitor experiences.

But what if there were one obvious thing that most publishers were missing, one that provided more value than just about anything else they could do?

This is exactly what Iggy Chen, the head of business intelligence at Ezoic, discussed in his recent case study at Google in New York during Pubtelligence.

Iggy highlighted the tremendous value that website visitor segmentation brings to digital publishing. He showed why simple ways of treating visitors differently offered dramatic long-term revenue increases.

Below, I’ll share his findings and examples and provide some details on how you can do this on your web properties.

Understanding website visitor differences

All website visitors behave differently. Their behavior and their willingness to engage with a site’s content directly control the amount of revenue the website earns.

Even without direct advertiser deals, publisher earnings are affected by audience behavior. Inside programmatic exchanges, visitor engagement correlates directly with how much advertisers bid (or whether they bid at all) for publisher inventory.

Additionally, how visitors browse a publisher’s website directly affects the number of ad impressions shown per page, which in turn affects the publisher’s EPMV (earnings per thousand visitors, or session revenue).

We covered this in an in-depth study here.
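To make the EPMV definition concrete, here is a minimal Python sketch. It assumes each value in the input list is the total ad revenue from one visitor’s session (a simplification, since real visitors can have several sessions); the function name `epmv` and the sample figures are hypothetical.

```python
from __future__ import annotations


def epmv(session_revenues: list[float]) -> float:
    """Earnings per thousand visitors (EPMV).

    Assumes (for illustration) one entry per visitor, holding that
    visitor's total session ad revenue.
    """
    if not session_revenues:
        return 0.0
    # Average revenue per visitor, scaled up to 1,000 visitors.
    return sum(session_revenues) / len(session_revenues) * 1000


# Hypothetical data: one visitor browsed several pages, two bounced after one.
print(round(epmv([0.05, 0.01, 0.01]), 2))  # 23.33
```

Because the numerator is session revenue rather than page revenue, anything that lengthens sessions raises EPMV even if each individual page earns the same.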

With that in mind, do we simply throw up our hands and say, “Well, my audience’s behavior and engagement are like the weather: unchangeable and impossible to influence”?

Of course not.

The truth is that changes we make to website pages can directly affect how many pages a user visits, how long they spend on the site, whether they return, and more.

Iggy compared this to the fantasy football leagues inside his company: the football fans joined one fantasy football league, while the non-football fans created their own.

The two leagues followed football very differently during the season.

Iggy’s case study follows how website visitor behavior can be grouped in a similar fashion.

What Iggy’s case study shows is that the real value lies not in finding a single impactful change for everyone, but in making small changes for different kinds of visitors that collectively improve pageviews per visit, engagement time, and bounce rates as a whole.

Watch Iggy’s full presentation here

Case study on website visitor analysis and optimization

The case study presented at Google featured the publisher TigerNet.

TigerNet is an online community for Clemson University sports fans. It has a very large contingent of loyal readers, as well as casual fans from the broader college sports landscape.

In the same way that Iggy compared the behavior of the fantasy football participants in his office, he argued that every site, TigerNet included, has visitor segments of Casual Users and Superfans.

He added the caveat that there are actually thousands of segments, but for the sake of his study, he would look at two distinctly different groups of users and show how treating them differently offered big financial value.

The result of treating TigerNet visitors differently

First, let’s examine how the case study was conducted.

TigerNet uses the Ezoic machine learning platform to learn from its visitors. The platform’s machines learn from visitor behavior over time and adjust things like ad placements, types, and density to maximize engagement and session length.

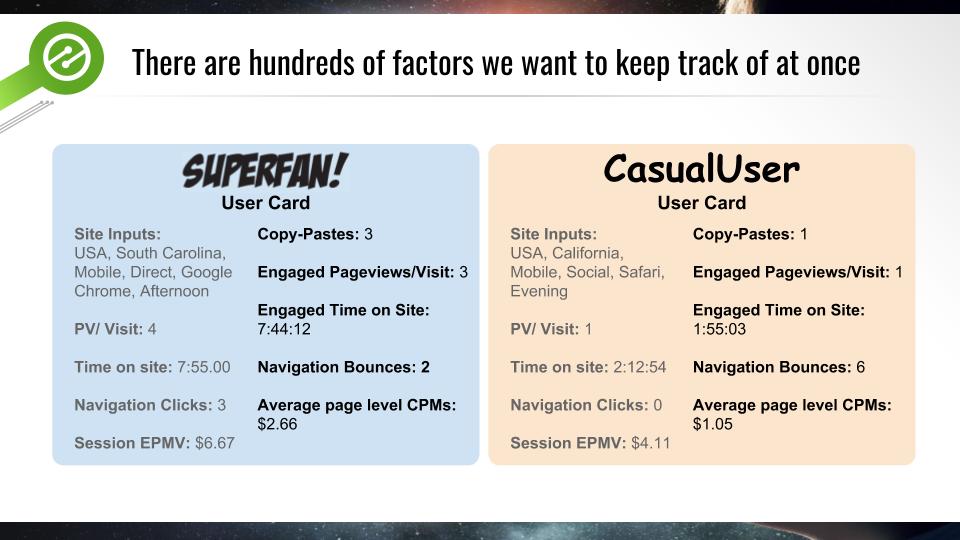

In this example, we are looking at two specific types of visitors Ezoic identified over time. Iggy uses the terms he referred to earlier, Superfans and Casual Users.

We can see some of the Superfan’s attributes above. Superfans are from Clemson’s home state (South Carolina), are usually return visitors, often browse on mobile, and check the site frequently.
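Ezoic’s machines learn segments like this from behavior data rather than from fixed rules. Purely to make the Superfan attributes concrete, the sketch below hard-codes them as a hypothetical rule; the `Visitor` fields, the three-of-four-traits rule, and the visit-count cutoff are all assumptions, not the platform’s actual logic.

```python
from dataclasses import dataclass

HOME_STATE = "SC"  # Clemson University is in South Carolina


@dataclass
class Visitor:
    # Hypothetical attributes mirroring the Superfan traits described above.
    region: str                # e.g. a US state code from IP geolocation
    is_return_visitor: bool
    device: str                # "mobile", "desktop", "tablet", ...
    visits_last_30_days: int


def segment(v: Visitor) -> str:
    """Hand-written stand-in for a learned classifier (illustrative only)."""
    traits = [
        v.region == HOME_STATE,
        v.is_return_visitor,
        v.device == "mobile",
        v.visits_last_30_days >= 8,  # hypothetical cutoff for "checks the site often"
    ]
    # Hypothetical rule: three or more Superfan traits => Superfan.
    return "Superfan" if sum(traits) >= 3 else "Casual User"


print(segment(Visitor("SC", True, "mobile", 12)))   # Superfan
print(segment(Visitor("GA", False, "desktop", 1)))  # Casual User
```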

The study examines how the machines treated these users differently based on their previous reactions to a whole host of variables.

Because this is TigerNet’s home page, older articles sit lower down on the page. The machines elected to put two ads at the top, where Superfans typically focus.

Presumably, the machines learned that Superfans are already well acquainted with older Clemson news, so these top locations offer the best chance of displaying highly viewable ads.

As previously discussed, putting ads where visitor engagement is high can often make those ads more valuable, but this has to be weighed against the risk of increasing bounces and shortening sessions.

As the Superfan moves from the homepage to their next pageview, the machines show them only two more ads, for a total of four in the session.

Previous data shows that two ads is typically the most this type of visitor will accept on their second pageview before total session pageviews start to drop. For this reason, the machines kept ad density within this threshold to ensure the session does not end sooner than normal.
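One simple way to derive a threshold like this from past sessions is sketched below. It is a hypothetical illustration, not how Ezoic computes it: group a segment’s historical sessions by the number of ads on the second pageview, then keep the largest ad count whose average session length stays close to the best observed. The function name, the 5% tolerance, and the sample history are assumptions.

```python
from collections import defaultdict


def ad_density_threshold(observations, tolerance=0.05):
    """Return the largest ad count whose average session length stays within
    `tolerance` (a fraction) of the best-performing ad count.

    observations: iterable of (ads_on_second_pageview, total_session_pageviews)
    pairs for one visitor segment.
    """
    by_count = defaultdict(list)
    for ads, pageviews in observations:
        by_count[ads].append(pageviews)
    avg = {ads: sum(pvs) / len(pvs) for ads, pvs in by_count.items()}
    best = max(avg.values())
    # Ad counts that do not meaningfully shorten the session.
    acceptable = [ads for ads, pv in avg.items() if pv >= best * (1 - tolerance)]
    return max(acceptable)


# Hypothetical Superfan history: sessions shorten sharply at three ads.
history = [(1, 6.0), (1, 6.0), (2, 6.0), (2, 5.8), (3, 4.0), (3, 4.2)]
print(ad_density_threshold(history))  # 2
```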

Finally, notice that this last ad sits at the very end of the article. Since previous data shows that this user will most likely read the entire article, the machines placed the high-value ad after the content instead of in the middle of the page, where it would interrupt their reading flow, especially since they’re unlikely to stop midway and click on the ad.

This keeps ad value higher across the site, because fewer advertisers’ ads are ignored or counted as non-viewable. That helps keep bid competition for ad space on this page high while also maximizing the value of this individual session.

Understanding how this visitor behaves allowed the machines to distribute ads evenly across the entire session, maximizing both earnings and the user’s experience.

It also let them keep ads in places that prevent ad value dilution.

Treating the “Casual Users” differently

Now, let’s take a look at how the machines used previous visitor behavior information to treat another visitor segment differently.

In this case, we are looking at the Casual User. The machines have learned that visitors with these attributes (located outside Clemson’s home state, arriving from a social site, visiting in the evening, and so on) typically have far shorter sessions.

For this reason, their content is monetized differently, since these visitors rarely view more than one or two pages.

Since these visitors are typically skimming the site, the machines place the higher-value ads at the top of article pages, where they are most likely to be viewed; the machines have learned that this visitor does not always make it to the bottom of the page.

This approach allows the machines to keep EPMV (total session revenue) higher than it would have been had they kept the same static ad locations delivered to the Superfan.

By treating these two users differently, the machines kept total EPMV for both user sessions about 45% higher than it would have been had both users received the same ad placements and density.
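The placement logic in this section, end-of-article slots for Superfans and top-of-page slots for Casual Users, can be approximated with scroll-depth data. The sketch below is a hypothetical rule, not Ezoic’s model: it picks the deepest candidate slot that a large enough share of a segment actually scrolls to, on the reasoning that deeper slots interrupt reading less. The candidate depths, the 70% reach cutoff, and the sample scroll data are assumptions.

```python
def reach_rate(scroll_depths, slot_depth):
    """Share of sessions whose maximum scroll depth (0-100 %) reached `slot_depth`."""
    return sum(d >= slot_depth for d in scroll_depths) / len(scroll_depths)


def choose_slot(scroll_depths, candidate_depths=(10, 50, 95), min_reach=0.7):
    """Pick the deepest candidate slot that at least `min_reach` of the segment sees.

    Deeper slots interrupt reading less, so they are preferred as long as
    enough visitors actually scroll that far; otherwise fall back to the top slot.
    """
    viable = [d for d in candidate_depths if reach_rate(scroll_depths, d) >= min_reach]
    return max(viable) if viable else min(candidate_depths)


# Hypothetical maximum scroll depths (percent of the article) per session.
superfan_scrolls = [100, 100, 98, 96, 100, 60]
casual_scrolls = [20, 35, 15, 100, 40, 25]
print(choose_slot(superfan_scrolls))  # 95 (end of article)
print(choose_slot(casual_scrolls))    # 10 (top of article)
```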

What else can machines tell us about website visitor behavior?

Part of how machine learning works in this case is that the machines themselves start to work out which factors matter most to ad value and user experience on each individual website.

In this case, we can see a few factors that have proven to be worth tracking.

These two visitors are vastly different, and the machines have learned which attributes best classify each of them.

This includes tracking and understanding these metrics for different visitors:

- Engaged pageviews per visit

- Engagement time

- Navigation bounces

- DOM Interactive and DOM Complete

- Return visitor rate

- Copy/paste per visit

- Share interaction rate

- Page scroll percentage

- RPM by page length

- EPMV by landing page word count

- and many others…
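Most of these metrics are simple aggregates over session logs, computed per segment. The sketch below shows three of them in Python; the `Session` and `PageView` record shapes and the 10-second threshold for an “engaged” pageview are assumptions for illustration, not Ezoic’s definitions.

```python
from __future__ import annotations

from dataclasses import dataclass, field

ENGAGED_SECONDS = 10  # hypothetical cutoff for counting a pageview as "engaged"


@dataclass
class PageView:
    engaged_seconds: float
    scroll_pct: float  # maximum scroll depth, 0-100


@dataclass
class Session:
    segment: str
    is_return_visitor: bool
    pageviews: list[PageView] = field(default_factory=list)


def _avg(values):
    values = list(values)
    return sum(values) / len(values) if values else 0.0


def segment_metrics(sessions, segment):
    """Compute a few of the listed metrics for one visitor segment."""
    rows = [s for s in sessions if s.segment == segment]
    return {
        "engaged_pageviews_per_visit": _avg(
            sum(pv.engaged_seconds >= ENGAGED_SECONDS for pv in s.pageviews)
            for s in rows
        ),
        "return_visitor_rate": _avg(s.is_return_visitor for s in rows),
        "avg_scroll_pct": _avg(pv.scroll_pct for s in rows for pv in s.pageviews),
    }


# Hypothetical sample sessions.
sessions = [
    Session("Superfan", True, [PageView(30, 100), PageView(5, 40)]),
    Session("Superfan", False, [PageView(20, 90)]),
]
print(segment_metrics(sessions, "Superfan"))
```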

These metrics all provide a much more granular view of how visitors actually engage with a site’s content.

This allows publishers to take a long-term view of monetization and maximize returns over time.

It also prevents the extremely common problem of “over-monetization” that is driving down ad rates for many publishers.

You can track all of these in the new Ezoic Big Data Analytics suite, which publishers can access at no cost.

The power of adapting to website visitor data in real-time

Beyond the ability to segment visitors and treat them differently, Iggy’s case study also appeared to show fairly strong data on the benefit of doing this in real time.

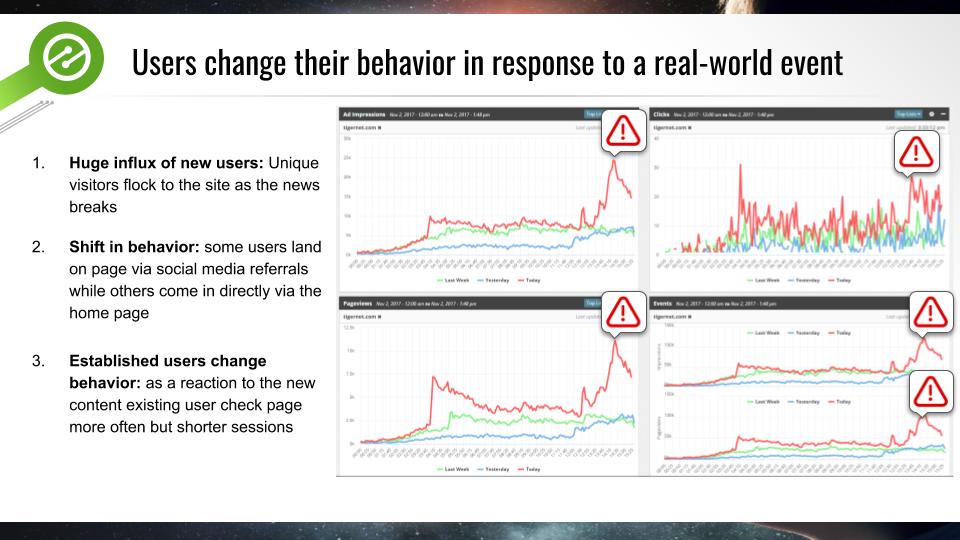

In one example, he showed how the machines quickly adapted to a surge of new visitors.

This surge was directly related to the injury of star Clemson kicker Greg Huegel.

As the machines parsed the data, they could see that user behavior had shifted from the norm during this event.

There was a large influx of new unique users coming in from social media as people shared the news via Facebook, Twitter, and Reddit.

These are users who have either never been to the site before or have been but don’t visit frequently.
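Detecting a shift like this in real time can be as simple as watching the share of recent arrivals that are new visitors from social sites. The sketch below is a hypothetical illustration, not Ezoic’s method; the window size, the 15% baseline share, the 2x multiplier, and the referrer list are all assumptions.

```python
from collections import deque

# Illustrative referrer list; a real system would classify referrers more robustly.
SOCIAL_REFERRERS = {"facebook.com", "twitter.com", "reddit.com"}


class SurgeDetector:
    """Flag when new visitors arriving from social sites make up an unusually
    large share of recent traffic. A hypothetical rule, not Ezoic's logic."""

    def __init__(self, window=500, baseline_share=0.15, multiplier=2.0):
        self.recent = deque(maxlen=window)  # rolling window of recent arrivals
        self.baseline_share = baseline_share
        self.multiplier = multiplier

    def observe(self, is_new_visitor: bool, referrer_domain: str) -> bool:
        """Record one arrival; return True while a surge is detected."""
        self.recent.append(is_new_visitor and referrer_domain in SOCIAL_REFERRERS)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough data yet to compare against the baseline
        share = sum(self.recent) / len(self.recent)
        return share > self.baseline_share * self.multiplier
```

A flag from a detector like this could, for example, prompt a system to treat incoming visitors with Casual User placements until traffic returns to normal.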

So if we look at the Superfan and Casual User again, we can see big differences in how these visitors can be treated in this scenario, and some approaches are clearly more effective than others.

For a Superfan landing on the article, the machines have learned that they’re likely to keep browsing, so they spread the ads across the entire user journey, resulting in fairly consistent page-level CPMs.

On the other hand, for a Casual User landing on the article, the machines concentrate more ads on the page, since they have learned that this visitor will most likely read the single page and then leave.
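Why spreading ads suits one segment while concentrating them suits another can be shown with a toy model. In this hypothetical sketch (not Ezoic’s model), each pageview earns revenue in proportion to its ads, and the chance of the visitor opening another page drops sharply when a page exceeds the segment’s ad tolerance. The continuation probabilities, tolerance, penalty, and unit revenue per ad are illustrative assumptions, and revenue is in arbitrary units.

```python
def expected_session_revenue(ads_per_page, base_continue, ad_tolerance,
                             overload_penalty=0.25, revenue_per_ad=1.0):
    """Expected ad revenue of one session under a toy continuation model.

    ads_per_page: planned ad count for pageview 1, 2, 3, ...
    base_continue: chance the visitor opens another page when the ad load is tolerable
    ad_tolerance: most ads per page the segment accepts before sessions shorten
    overload_penalty: factor applied to base_continue on pages above ad_tolerance
    """
    total = 0.0
    reach = 1.0  # probability the visitor is still browsing at this pageview
    for ads in ads_per_page:
        total += reach * ads * revenue_per_ad
        p_continue = base_continue
        if ads > ad_tolerance:
            p_continue *= overload_penalty  # heavy pages tend to end the session
        reach *= p_continue
    return total


# Same total ad budget (8 ads over 4 pageviews), laid out two ways.
spread, front_loaded = [2, 2, 2, 2], [5, 1, 1, 1]
for name, base in [("Superfan", 0.8), ("Casual User", 0.1)]:
    print(name,
          round(expected_session_revenue(spread, base, ad_tolerance=2), 3),
          round(expected_session_revenue(front_loaded, base, ad_tolerance=2), 3))
```

With these assumed parameters, the spread layout earns more from the Superfan, whose long sessions make later pageviews valuable, while the front-loaded layout earns more from the Casual User, who rarely reaches a second page.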

Digging deeper, if we look at a Superfan landing on the home page instead of an article page, the machines still show them a couple of ads, because they know Superfans are familiar with the layout and willing to put up with these ads to find the content they’re looking for.

When we compare these two types of users, their optimal EPMVs are very different. The publisher has to not only guess correctly which type of user a visitor is, but also show them the right pages to maximize their EPMV; otherwise, they’re leaving money on the table.

That’s where having machines learn and filter some of this data makes a lot of sense.

User behavior doesn’t form a discrete binary population

Iggy Chen points out that every website has its own version of these two types of visitor segments. He also shares that there are likely thousands of other visitor segments.

He discussed how visitors can be grouped using two different kinds of data.

Things publishers can affect:

- Navigation options present (sidebar, menu, links)

- Page load speed – critical path rendering

- Content positioning

- Ad Types (display, native, link, video)

- Ad Locations (where on the page)

- Ad Sizes

- Presence of other ads and their location

Things publishers can’t affect but must account for:

- OS or Device Type

- Browser type

- Time of day / day of week

- User connection speed

- Geographic location

- Upstream traffic source

- UX metrics from other users visiting this page

- Subscriber or non-subscriber (potentially)

- Actual user history

- and much more

Combining these pieces of data allows you to begin dividing visitors into different segments. According to Chen, every single one will behave differently.
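In practice, combining the data means building a segment key from several attributes and comparing outcomes per key. The Python sketch below groups sessions by device, upstream traffic source, and time of day, then computes EPMV for each combination; the dictionary schema, the daypart boundaries, and the sample sessions are hypothetical.

```python
from collections import defaultdict


def daypart(hour: int) -> str:
    """Bucket an hour (0-23) into a hypothetical time-of-day segment."""
    if 5 <= hour < 12:
        return "morning"
    if 12 <= hour < 18:
        return "afternoon"
    return "evening"  # 18:00-04:59 in this sketch


def epmv_by_segment(sessions):
    """EPMV per segment, where a segment is a (device, traffic source, daypart) key.

    sessions: iterable of dicts with "device", "source", "hour", and "revenue"
    keys (a hypothetical schema), one dict per visitor session.
    """
    totals = defaultdict(lambda: [0.0, 0])  # key -> [total revenue, session count]
    for s in sessions:
        key = (s["device"], s["source"], daypart(s["hour"]))
        totals[key][0] += s["revenue"]
        totals[key][1] += 1
    return {key: revenue / count * 1000 for key, (revenue, count) in totals.items()}


sample = [
    {"device": "mobile", "source": "social", "hour": 21, "revenue": 0.01},
    {"device": "mobile", "source": "social", "hour": 22, "revenue": 0.03},
    {"device": "desktop", "source": "direct", "hour": 9, "revenue": 0.05},
]
print(epmv_by_segment(sample))
```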

What’s the ad earnings value of doing this?

Sticking with our TigerNet case study, we can see that TigerNet achieved significant revenue increases, improved user experience metrics, and more pageviews.

By identifying and tracking user behavior, the case study shows that publishers can leverage technology to help optimize decision-making in order to improve user experiences and increase total user value.

This is a new capability for publishers: hand-tuning these variables would be great, but it cannot be scaled. That makes this an optimal approach for publishers and ad ops professionals alike.

At the end of the day, treating each user appropriately means happy users over the long term and happy users means happy publishers.

Wrapping it up

Interested in trying something like what TigerNet did on your site? Ezoic is a platform that publishers can use for free to test and try things like this on their own.

Questions or comments about this case study? Leave them below. Iggy and I will both respond.

[/et_pb_text][/et_pb_column][/et_pb_row][/et_pb_section]